Deep learning is not as complex a concept as people outside the sciences often assume. Scientific progress over the years has reached a stage where a great deal of exploration and research needs the assistance of artificial intelligence. Since machines can be given a particular set of algorithms to understand and react to various tasks within a matter of seconds, working with them broadens the scope for scientific breakthroughs, resulting in techniques and procedures that make human life simpler and richer. However, in order to work with machines, it is important for them to understand and recognize things much the way the human brain does. For example, we may recognize an apple by its shape and colour. For a robot to go through the same cognitive process, it must be programmed, or trained, to recognize the same features. This is termed machine learning, and deep learning is a widely practiced and highly regarded branch of machine learning.

So, what is the scope and utility of deep learning?

In a few words, deep learning can be called a one-stop solution for several advanced scientific creations. A representation, or simply an object, can be understood in many ways. To simplify the learning task for a machine, an object can be analyzed and broken down into abstract representations made up of pixels, edges and shapes. Breaking an object down analytically into its most easy-to-understand state largely removes the need for handcrafted features or elaborate programming techniques. Thus, your computer or robot can learn the things you want it to with far less supervision and intensive involvement from you.

The neural network techniques

For those who have already started to get involved, it is not difficult to guess that the field takes its inspiration from the human brain and the way it regulates different biological processes and responses. The way the brain relates and correlates images and situations to set up the instructions that carry us through daily cognitive processes stems from its neural network. Neurons, or nerve cells, are connected to each other and send out electrical impulses, which are the basis of all bodily communication. In a similar pattern, thousands upon thousands of neurons contribute, together as well as individually, to a person’s imaging, understanding and reasoning abilities. In the case of machines, this mechanism is imitated through algorithms.

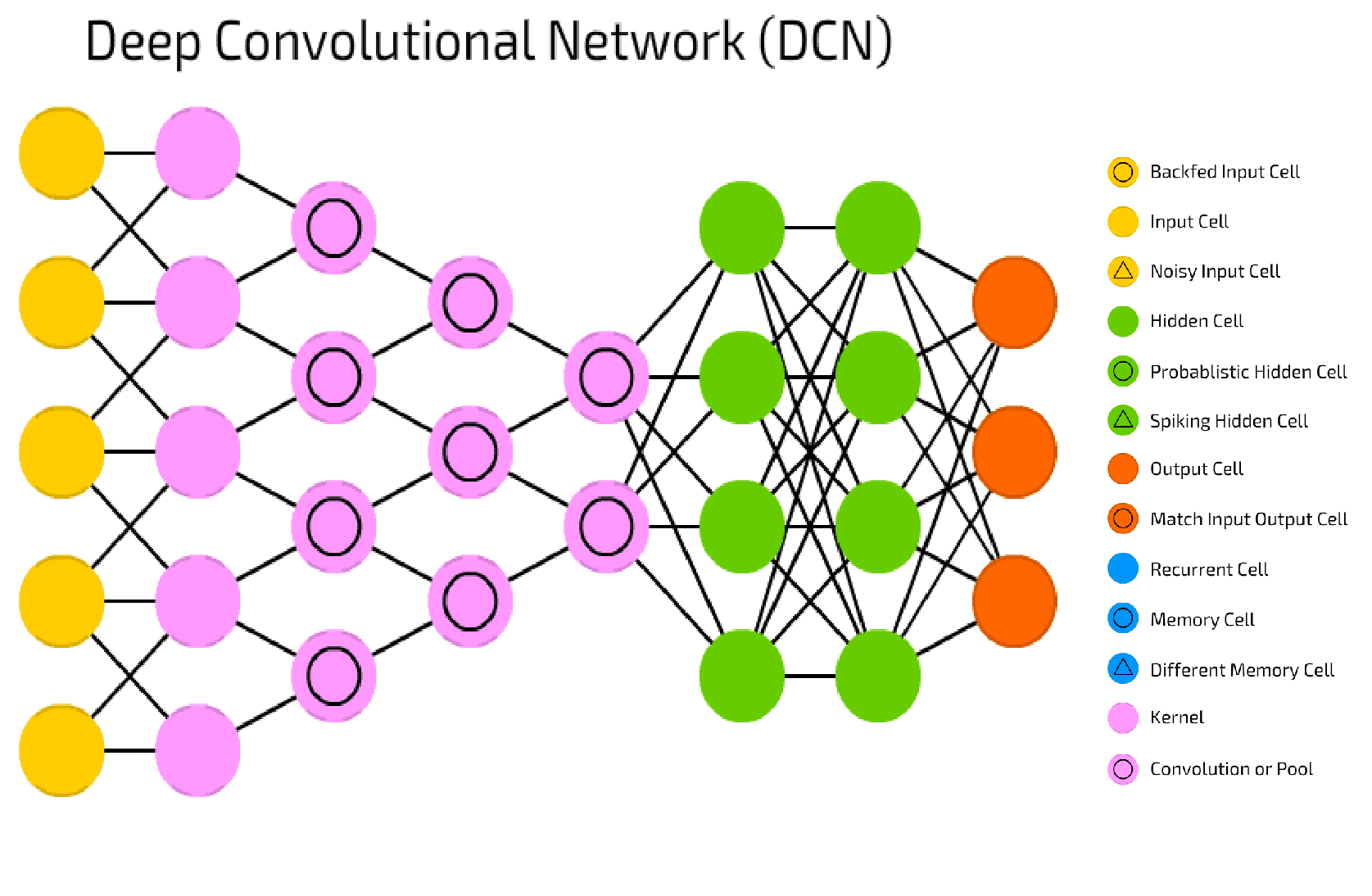

It is useful to know the types of neural network that have had great impact in commercial R&D. Some networks have a more complicated structure and a wide variety of uses across industries built on scientific research and discovery. This is where we come across deep convolutional networks, or DCNs. A DCN comprises one or more convolutional layers and is generally designed to take advantage of the 2D structure of its input, such as an image. Its weights are learnable and are shared across positions in the input, which leaves fewer parameters to fit and makes it easier to train than a fully connected network of similar size. DCNs are inspired by the biological setup of animals (including humans), where individual neurons of the visual cortex are arranged so that each responds to a region of the visual field and together they cover it. The designs of modern convolutional neural networks are diverse, and certain designs have developed distinguishing features over time. LeNet-5, a pioneering 7-level convolutional network by Yann LeCun, has been used by banks to recognize hand-written numbers on checks digitized as 32 x 32 pixel images.
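To make the idea of a small set of weights sliding over a 2D input more concrete, here is a minimal sketch in plain Python. The function name `conv2d`, the toy image and the hand-picked kernel are all illustrative assumptions; in a real convolutional network the kernel values would be learned from data rather than written by hand, and a library would do the arithmetic.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Both arguments are lists of rows. The same kernel weights are reused at
    every position, which is the weight sharing that convolutional layers rely on.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            # Weighted sum of the image patch currently under the kernel.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4 x 4 image (assumption): dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked 2 x 2 kernel (assumption) that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is largest in the middle column, exactly where the image changes from dark to bright. With the same arithmetic, a 5 x 5 kernel applied to a 32 x 32 image yields a 28 x 28 feature map, since 32 - 5 + 1 = 28.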

Deep Convolutional Neural Network

Use case:

A very simple use case of a DCN is handwriting recognition and converting images to text. A large retailer in Australia, with the help of a startup, is using a DCN to convert competitors' price-point labels into text data for analysis.

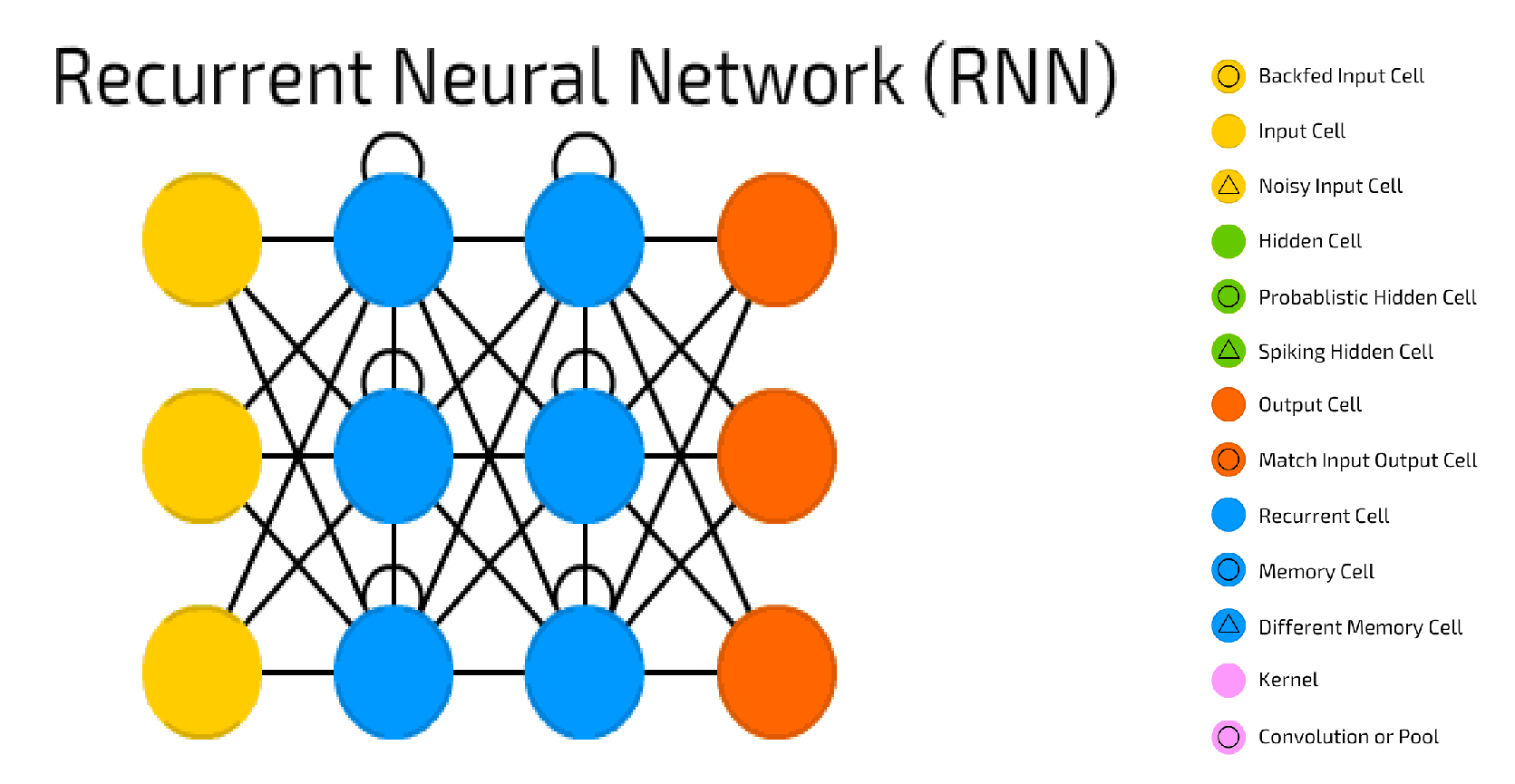

A recurrent neural network, or RNN, is another type of artificial neural network, in which connections between units form a directed cycle. This network has gone through several changes in design, one of the major modifications being the construction of the neural history compressor, a stack of RNNs. Here, the RNN at the input level learns to predict its next input from the previous inputs. Each higher-level RNN thus learns a compressed representation of the information in the RNN below it. When the incoming data sequence has some learnable predictability, it becomes easier to solve complicated sequence-related problems. In 1993, researchers reported solving a deep learning task that required more than 1,000 layers, with results reportedly arriving in half the anticipated time.

Recurrent Neural Network

For structured predictions over variable-length inputs, we have the recurrent neural network, a deep neural network created by applying the same set of weights recursively over a structure. By traversing a given structure in topological order, outputs such as scalar predictions become possible. Thus, these networks can learn deep structured information.
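The idea of reusing one set of weights at every step of a variable-length input can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function name `rnn_scalar_prediction`, the single hidden unit and the fixed weight values are all made up for the example, whereas a real network would have many units and would learn its weights from data.

```python
import math


def rnn_scalar_prediction(sequence, w_in, w_rec, w_out):
    """Run a one-unit recurrent network over `sequence` and return a scalar.

    The same three weights are applied at every step, so the function
    accepts sequences of any length.
    """
    hidden = 0.0  # hidden state before any input has been seen
    for x in sequence:
        # The new state depends on the current input and the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden)
    return w_out * hidden


# Illustrative weights (assumption): these would normally be learned.
W_IN, W_REC, W_OUT = 0.5, 0.8, 1.0

short = rnn_scalar_prediction([1.0, 0.0], W_IN, W_REC, W_OUT)
longer = rnn_scalar_prediction([1.0, 0.0, 0.0, 1.0, 0.5], W_IN, W_REC, W_OUT)
print(short, longer)
```

Both calls use the same weights even though the inputs have different lengths. The hidden state is what carries information from earlier elements of the sequence forward to the final prediction.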

Use case:

A very good use case of an RNN is predicting the next word in a conversation as part of natural language processing. Bots use this technique extensively to converse with humans, and it is likely to lead the way for predictions on unstructured data going forward.

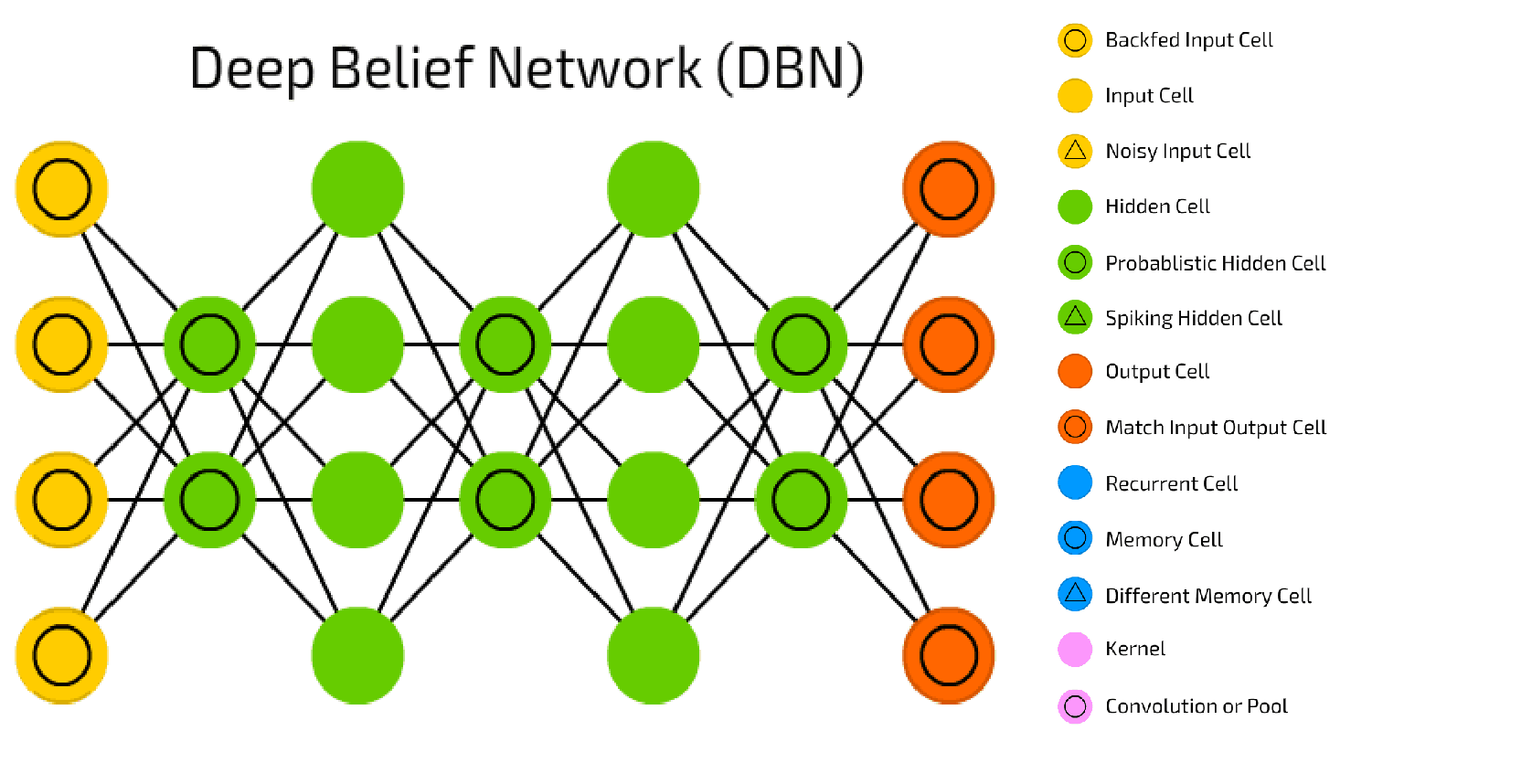

Another multi-layer neural network is the deep belief network, or DBN, which comprises multiple layers of latent variables. There are connections between the layers but not between the units within each layer. Trained on examples, the layers come to work as feature detectors on the inputs. With further training, DBNs can be made to perform classification.

Deep Belief Neural Network

Use case:

A very good use case of a DBN is facial recognition, which is becoming a major play in the security industry, where CCTV footage is being used to identify persons of interest in real time. This technology is being used extensively by security services around the world to monitor terror modules. In fact, NASA is using DBNs to classify terabytes of high-resolution, highly diverse (due to collection, pre-processing, filtering, etc.) satellite imagery. For instance, instead of a face in a crowd of many thousands, imagine a very small spot in the galaxy exhibiting certain characteristics.

So, can these artificial networks perform functions as diverse as those of the human brain? Well, research pursued by scholars in the area foresees an era of artificial super-intelligence dawning, where this might just be possible!

Methodology and utilities

To understand the methodology behind artificial neural networks, it is important to trace the history of their growth. The work that initially paved the way for neural network research was a computational model based on algorithms and mathematics. In later years, psychologists like Donald Hebb worked along the same lines to develop a hypothesis of learning based on neural plasticity. It is regarded as an unsupervised learning rule, and later variants with greater potential grew out of it. However, nothing like multi-layer neural networks that could perform a variety of functions was in sight before the backpropagation algorithm was invented and brought into practice around 1975. Artificial intelligence began to grow at a steady rate soon after.

The utility of such a learning technique lies in deep architectures for building machine memory. What the technique learns is essentially abstract representations of regular ideas and objects. The best part about these abstract representations is that they tend to remain invariant even when the input data changes in superficial ways. In fact, these representations can also disentangle the factors of variation in the data supplied. For example, an image inside a computer is shaped by various sources of variation, such as object shape, size, material and lighting.

What researchers and students find most interesting is the sea of opportunities that this learning technique opens up for machines. In simple words, it is training given to machines that makes them far more capable, enabling them to deal with simple as well as complicated tasks with little human guidance and supervision. Learning about it is great fun because these neural networks comprise several hidden layers, each with its own parameters. It might seem like a cumbersome process to master, but thankfully the studies and courses designed around it have made it a lot less taxing to undertake. The process of learning starts with feeding sensory data to the first layer. What this layer learns is used to develop the second layer. Through this methodical approach, the machine brain learns to identify, process and compile a lot of added information by the time the last layer is in place.
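The way data moves from one layer to the next can be sketched in plain Python. The example below shows only the forward flow through the layers, not the training that adjusts the weights; the function names `dense_layer` and `forward`, the layer sizes and every weight value are assumptions chosen for illustration.

```python
import math


def dense_layer(inputs, weights, biases):
    """Apply one fully connected layer with a sigmoid activation.

    `weights` holds one list of input weights per neuron in the layer.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


def forward(inputs, layers):
    """Feed `inputs` through each layer in turn and return every layer's output."""
    activations = [inputs]
    for weights, biases in layers:
        # Each layer works on the output of the layer before it.
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations


# Illustrative network (assumption): 3 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[0.5, -0.5, 0.25], [0.1, 0.2, 0.3]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.0]),
]

sensory_data = [1.0, 2.0, 4.0]
activations = forward(sensory_data, layers)
for depth, values in enumerate(activations):
    print("layer", depth, values)
```

The first entry printed is the raw input, and each later entry is computed only from the entry before it. That dependency is what lets later layers build on whatever the earlier layers have extracted.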

Data processing and real-time analysis today have deep learning to thank for their success!

Two blogs cover ten deep learning use cases that illustrate how neural networks are used: